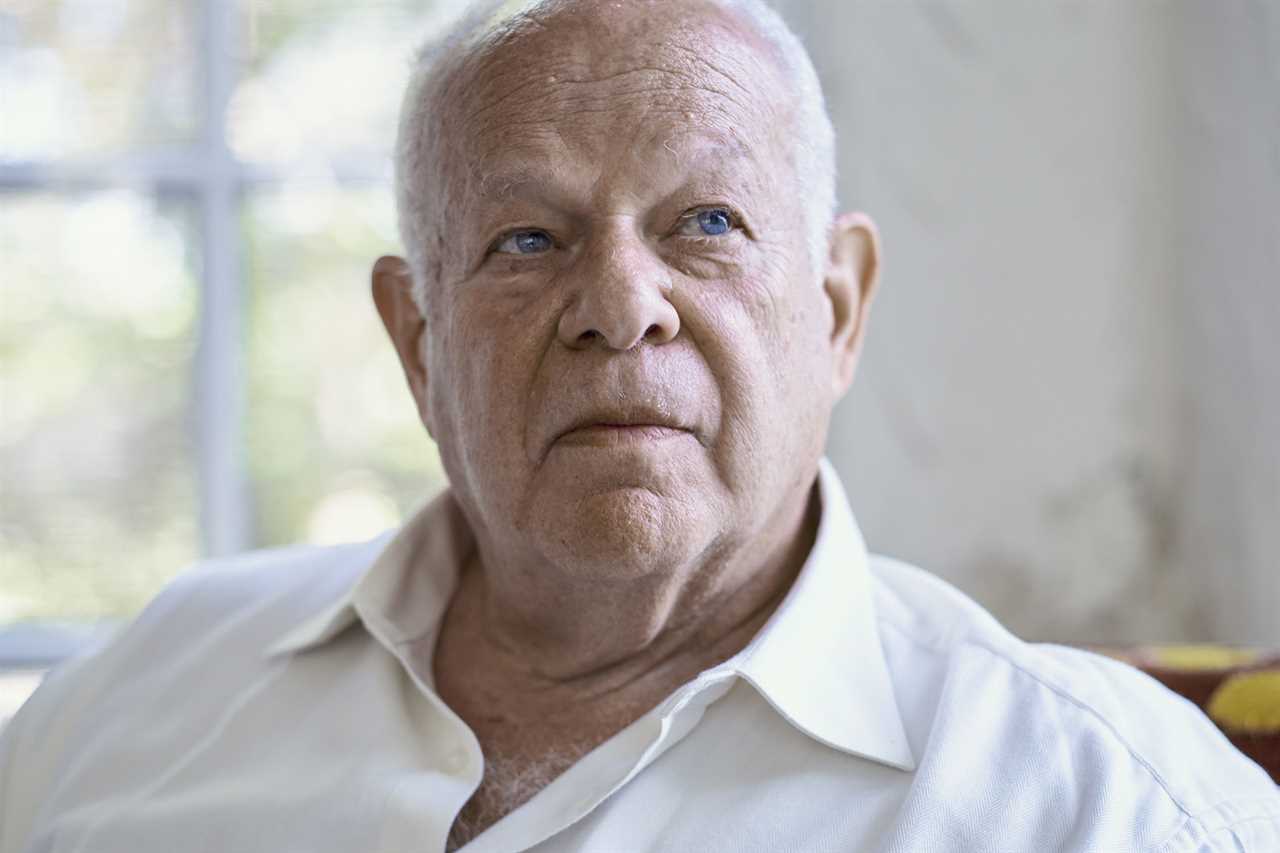

Martin Seligman, the influential American psychologist, found himself pondering his legacy at a dinner party in San Francisco one late February evening. The guest list was shorter than it used to be: Seligman is 81, and six of his colleagues had died in the early Covid years. His thinking had already left a profound mark on the field of positive psychology, but the closer he came to his own death, the more compelled he felt to help his work survive.

The next morning he received an unexpected email from a former graduate student, Yukun Zhao. Zhao's message was as simple as it was astonishing: his team had created a “virtual Seligman.”

Zhao wasn’t just bragging. Over two months, by feeding every word Seligman had ever written into cutting-edge AI software, he and his team had built an eerily accurate version of Seligman himself — a talking chatbot whose answers drew deeply from Seligman’s ideas, whose prose sounded like a folksier version of Seligman’s own speech, and whose wisdom anyone could access.

Impressed, Seligman circulated the chatbot to his closest friends and family to check whether the AI actually dispensed advice as well as he did. “I gave it to my wife and she was blown away by it,” Seligman said.

The bot, cheerfully nicknamed “Ask Martin,” had been built by researchers based in Beijing and Wuhan — originally without Seligman’s permission, or even awareness.

The Chinese-built virtual Seligman is part of a broader wave of AI chatbots modeled on real humans, using the powerful new systems known as large language models to simulate their personalities online. Meta is experimenting with licensed AI celebrity avatars; you can already find internet chatbots trained on publicly available material about dead historical figures.

But Seligman’s situation is also different, and in a way more unsettling. It has cousins in a small handful of projects that have effectively replicated living people without their consent. In Southern California, tech entrepreneur Alex Furmansky created a chatbot version of Belgian celebrity psychotherapist Esther Perel by scraping her podcasts off the internet. He used the bot to counsel himself through a recent heartbreak, documenting his journey in a blog post that a friend eventually forwarded to Perel herself.

Perel addressed AI Perel’s existence at the 2023 SXSW conference. Like Seligman, she was more astonished than angry about the replication of her personality. She called it “artificial intimacy.”

Both Seligman and Perel eventually decided to accept the bots rather than challenge their existence. But if they’d wanted to shut down their digital replicas, it’s not clear they would have had a way to do it. Training AI on copyrighted works isn't actually illegal. If the real Martin had wanted to block access to the fake one — a replica trained on his own thinking, using his own words, to produce all-new answers — it’s not clear he could have done anything about it.

AI-generated digital replicas illuminate a new kind of policy gray zone created by powerful new “generative AI” platforms, where existing laws and old norms begin to fail.

In Washington, spurred mainly by actors and performers alarmed by AI’s capacity to mimic their image and voice, some members of Congress are already attempting to curb the rise of unauthorized digital replicas. In the Senate Judiciary Committee, a bipartisan group of senators — including the leaders of the intellectual property subcommittee — are circulating a draft bill titled the NO FAKES Act that would force the makers of AI-generated digital replicas to license their use from the original human.

If passed, the bill would allow individuals to authorize, and even profit from, the use of their AI-generated likeness — and bring lawsuits against cases of unauthorized use.

“More and more, we’re seeing AI used to replicate someone’s likeness and voice in novel ways without consent or compensation,” Sen. Amy Klobuchar (D-Minn.) wrote to POLITICO, in response to the stories of AI experimentation involving Seligman and Perel. She is one of the co-sponsors of the bill. “Our laws need to keep up with this quickly evolving technology,” she said.

But even if the NO FAKES Act did pass Congress, it would be largely powerless against the global tide of AI technology.

Neither Perel nor Seligman resides in the country where their respective AI chatbot developers do. Perel is Belgian; her replica is based in the U.S. And AI Seligman is trained in China, where U.S. laws have little traction.

“It really is one of those instances where the tools seem woefully inadequate to address the issue, even though you may have very strong intuitions about it,” said Tim Wu, a legal scholar who architected the Biden administration's antitrust and competition policies.

Neither AI Seligman nor AI Perel was built, at least originally, with a profit motive in mind, their creators say.

Contacted by POLITICO, Zhao said he built the AI Seligman to help fellow Chinese citizens through an epidemic of anxiety and depression. China’s mental health policies have made it very difficult for citizens to get confidential help from a therapist. And the demand for mental health services in China has soared over the past three years due to the stresses imposed by China’s now-abandoned draconian “zero Covid” policy.

As vice director of Tsinghua University’s Positive Psychology Research Center in China, Zhao now researches the same branches of psychology his graduate adviser had pioneered. The AI Seligman his team had built — originally trained on 15 of Seligman’s books — is a way to bring the benefits of positive psychology to millions in China, Zhao told his old teacher.

Similarly, when Perel the human wasn’t available, a public version of the AI software driving ChatGPT and Perel’s podcasts allowed Furmansky to build the next best thing. He saw the same potential in AI that Zhao did: a means to access the knowledge locked away in the brains of a few really brilliant people.

Furmansky said he and Perel's team were on cordial terms regarding AI Perel, and had spoken about pursuing something more collaborative. Contacted by POLITICO, Perel's representative said Perel has not “endorsed, encouraged, enabled Furmansky or waived any of her rights to take legal action against Furmansky.” They declined to provide further details about her stance on the AI Perel.

Others don’t necessarily share Seligman's sanguine views about AI’s capacity to replicate the vocal and intellectual traits of real people, particularly without their knowing consent. In June, at a Senate hearing, a shaken mother recounted the harrowing details of a scammer who used AI to mimic her child’s voice. In October, anxious artist unions beseeched the Federal Trade Commission to regulate the creative industry’s data-handling practices, pointing to the dystopian possibility of vocalists, screenwriters and fashion models competing against AI models that look and sound like them.

Even Wu — a seasoned scholar of America’s complicated tech economy — held a straightforward view on the unsanctioned use of AI to impersonate humans. “My instinct is strong and immediate on it,” Wu said. “I think it's unethical and I think it is something close to body snatching.”

Lawmakers are also worried about who, ultimately, will reap the tangible and intangible profits of American intellectual property in the age of AI. “Neither Big Tech nor China should have the right to benefit from the work of American creators,” said Sen. Marsha Blackburn (R-Tenn.), another co-sponsor of the NO FAKES Act, in an email response to Seligman’s story.

Seligman's American citizenship has not stopped him from being something of a hero in the world of Chinese psychology. His theories on well-being — which carry the gravitas of global scientific credibility — are embedded in Chinese education policies from kindergarten onwards. Grade-school children there know who he is. And Zhao believes Seligman's popularity will help his mental health AI “coach” stand out in the Chinese market. “Marty has a big brand name,” Zhao said. “With his endorsement, a lot of people would come to use it, at least out of curiosity.”

But even for the Chinese citizens whom Zhao hopes to help, there’s a risk, one that exposes another facet of the new landscape of AI.

To talk to the chatbot, people type or speak into their computers, sharing confidential information about their lives. In the U.S., that would raise the question of which tech companies are listening and possibly selling that data about you.

In China, where the government is deeply concerned with monitoring citizens’ thoughts, there’s a far more immediate risk. The ruling Chinese Communist Party's pervasive electronic surveillance policies mean Chinese users of the virtual Seligman may unwittingly be sharing their thoughts with authorities, who could access them or interpret them as criticism of the one-party state.

Beijing has long wielded false diagnoses of mental illness to target dissidents with arbitrary detention in state-run institutions. The virtual Seligman could provide Chinese authorities a potential treasure trove of information on its citizens’ innermost thoughts.

With AI-generated digital replicas poised to enter the mainstream market from Hollywood to Chinese app stores, U.S. policymakers are running out of time to determine the rules surrounding their use. Since that first phone call with Zhao, Seligman says, other AI companies have reached out to him about licensing his library of work to them. “I told them, no, I'm not willing to do it because I don't know you. I don't trust you,” he said. Zhao is the exception, Seligman told POLITICO, because of their long friendship.

Seligman is no stranger to powerful government forces co-opting his work — his research on learned helplessness was used, without his knowledge, to develop the CIA’s “enhanced interrogation” techniques post 9/11. But after numerous experiments with his AI counterpart, he thinks virtual Seligman will do more good than harm in the world.

“It is enchanting to me,” he said, sitting in his study in Philadelphia, “that what I've written about and discovered over 60 years in psychology could be useful to people long after I was dead.”

Seligman leaned forward in his chair, as if divulging a secret. “That kind of legacy seems to me as close to immortality as a scientist can come.”

Phelim Kine contributed to this report.

----------------------------------------

By: Mohar Chatterjee

Title: ‘Something Close to Body Snatching’: A New Kind of AI Person Arrives

Sourced From: www.politico.com/news/magazine/2023/12/30/ai-psychologist-chatbot-00132682

Published Date: Sat, 30 Dec 2023 07:00:00 EST