By BRYAN CARMODY

If you’re involved in medical education or residency selection, you know we’ve got problems.

And starting a couple of years ago, the corporations that govern much of those processes decided to start having meetings to consider solutions to those problems. One meeting begat another, bigger meeting, until last year, in the wake of the decision to report USMLE Step 1 scores as pass/fail, the Coalition for Physician Accountability convened a special committee to take on the undergraduate-to-graduate medical education transition. That committee – called the UME-to-GME Review Committee or UGRC – completed their work and released their final recommendations yesterday.

This isn’t the first time I’ve covered the UGRC’s work: back in April, I tallied up the winners and losers from their preliminary recommendations.

And if you haven’t read that post, you should. Many of my original criticisms still stand (e.g., on the lack of medical student representation, or the structural configuration that effectively gave corporate members veto power), but here I’m gonna try to turn over new ground as we break down the final recommendations, Winners & Losers style.

–

LOSER: BREVITY.

The UGRC recommendations run 276 pages, with 5 appendices and 34 specific recommendations across 9 thematic areas. It’s a dense and often repetitive document, and the UGRC had the discourtesy to release it in the midst of a busy week when I didn’t anticipate having to plow through such a thing. But this is the public service I provide to you, dear readers.

–

WINNER: COMMITTEES AND FURTHER STUDY.

All told, the UGRC recommendations aren’t bad.

Some – like Recommendation #11 below – are toothless but hard to disagree with, and many of the recommendations that are more specific don’t go as far as I’d like.

But most of the recommendations are good, and almost all are thoughtful and offer the promise of improvement… someday, at some indefinite point in the future (after further study and additional consideration by more special committees, of course).

There are a few immediately actionable recommendations (such as the proposal for exclusively virtual interviews for the 2021-2022 season) which are unlikely to generate much controversy. And there are a handful of others that ought to inspire more debate.

–

WINNER: OSTEOPATHIC MEDICAL STUDENTS.

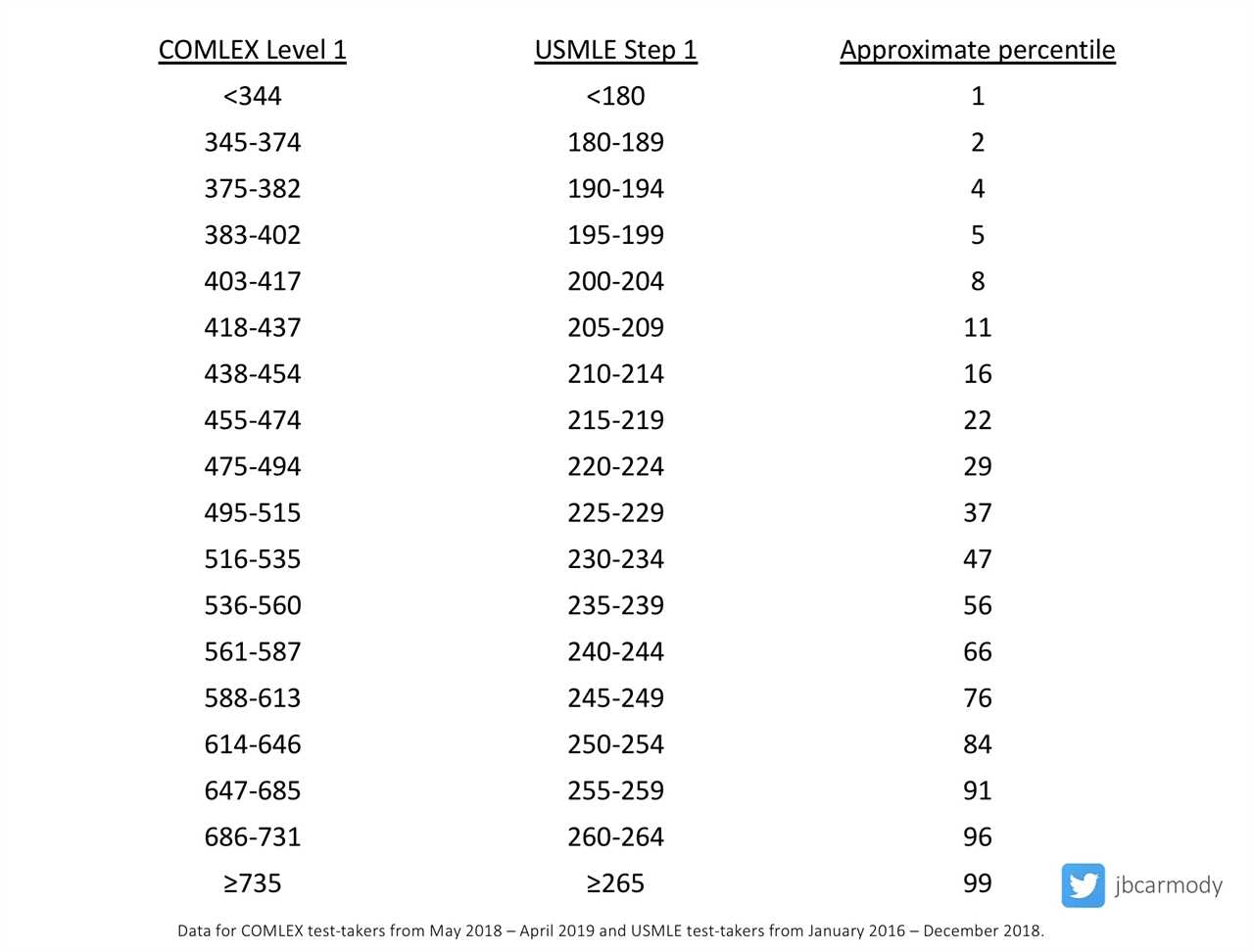

Currently, around three quarters of osteopathic medical students take both the USMLE and COMLEX-USA. In almost all states, either one is sufficient for licensure – but residency program directors strongly prefer the USMLE.

The de facto requirement for DO students to take two licensing exams is expensive and wasteful, and I’m on record as opposing it. If program directors could assess relative exam performance at a glance – through use of a single normalized score – many fewer DOs would feel compelled to take the USMLE.

(It’s worth pointing out, however, that the National Board of Osteopathic Medical Examiners (NBOME) doesn’t need the UGRC in order to make COMLEX-USA and USMLE scores more easily comparable: all they’d have to do is replace their ‘distinctive’ 200-800 score range with the 300-point scale more familiar to most program directors.)

–

LOSER: MDS AND IMGS.

The problem with treating COMLEX-USA and USMLE scores the same is that not everyone can take either exam. Although DO students are allowed to sit for the USMLE, MDs and international medical graduates (IMGs) are not eligible to register for COMLEX-USA.

Put another way, a DO student who is unhappy with his/her COMLEX-USA score might choose to take the USMLE to try to improve it – but an MD or IMG gets only one shot.

But that’s not the only bad news for MDs and IMGs.

Although there is wide individual variation, DO examinees overall score ~20 percentile points lower on the USMLE than they do on COMLEX-USA.

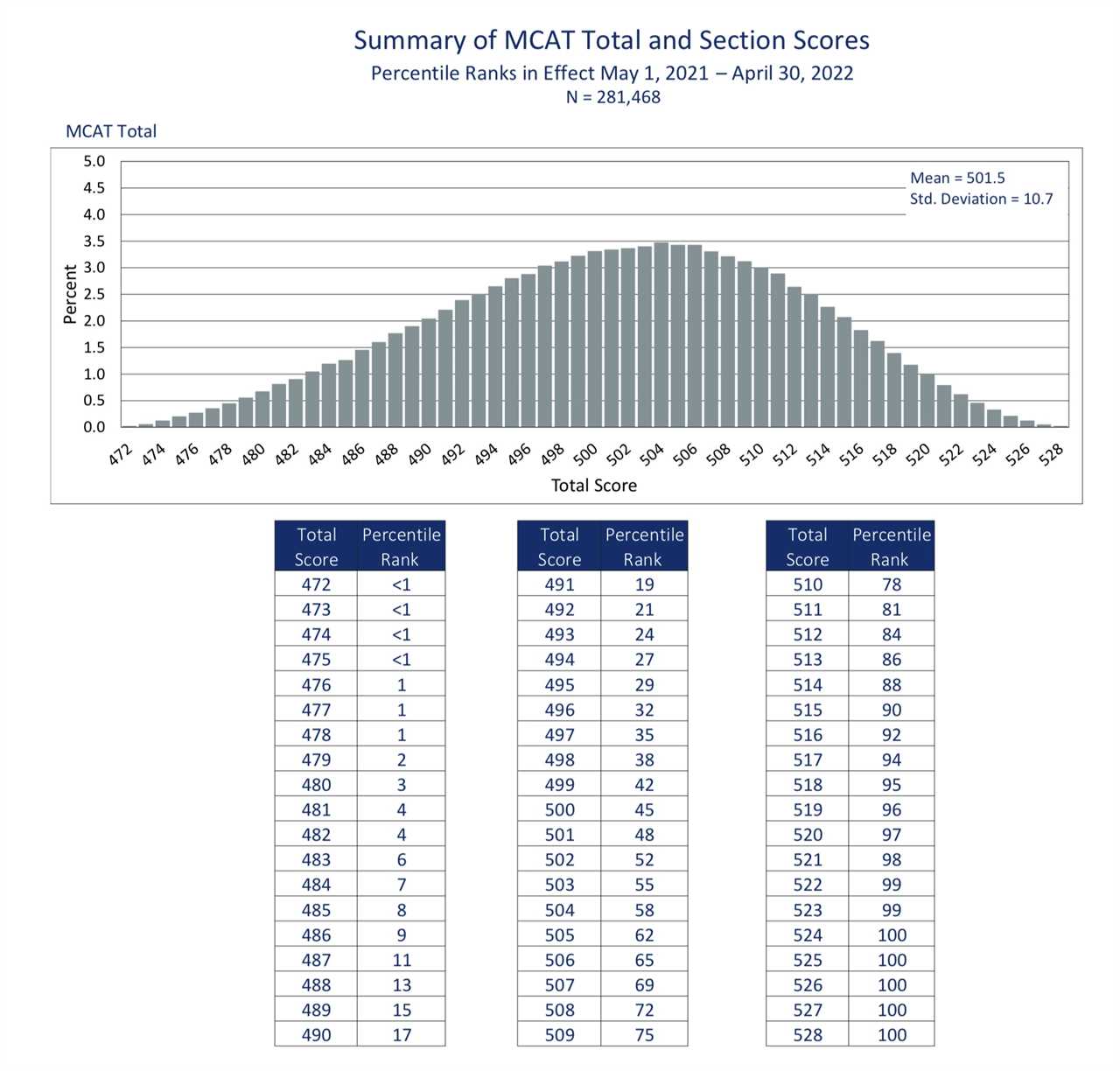

This mirrors pre-existing differences in standardized test performance between DO and MD medical students: the average MD student scores around the 81st percentile for MCAT performance, while the average osteopathic matriculant scores around the 58th.

In other words, to achieve the normalized score that will appear on ERAS, DO students taking COMLEX-USA compete against a somewhat less-accomplished group of standardized test takers than MDs and IMGs taking the USMLE.

(This potential source of unfairness could, of course, be rectified by allowing MDs and IMGs the option of taking COMLEX-USA.)

–

WINNER: THE NATIONAL BOARD OF OSTEOPATHIC MEDICAL EXAMINERS.

Recently, the NBOME has faced increasing questions about whether maintaining a ‘separate but equal’ licensing exam for DOs does more harm than good. I’ve argued – in direct response to a letter from the CEO of the NBOME himself – that all physicians should just take the USMLE, and that the NBOME should limit their exams to assessing specifically osteopathic competencies. Obviously, this kind of thinking presents an existential threat to the NBOME.

But if Recommendation #18 succeeds in establishing the COMLEX-USA as being equivalent to the USMLE for residency selection, the NBOME’s existence is all but assured – and they’ll find themselves in the enviable business position of selling exams to the rapidly-growing captive audience of osteopathic medical students for years to come. (You may therefore be unsurprised to learn that the NBOME’s CEO was a member of the specific UGRC workgroup that advanced this recommendation.)

–

WINNER: THE SHERIFF OF SODIUM.

While perusing the references for Recommendation #18, I was a bit surprised to see a link to an old Sheriff of Sodium post mixed among the references to authoritative academic journals.

Let’s just say this citation reflected a very selective reading of my bibliography as it relates to the NBOME… but I appreciated the credit nonetheless.

–

LOSER: THE UNMATCHED.

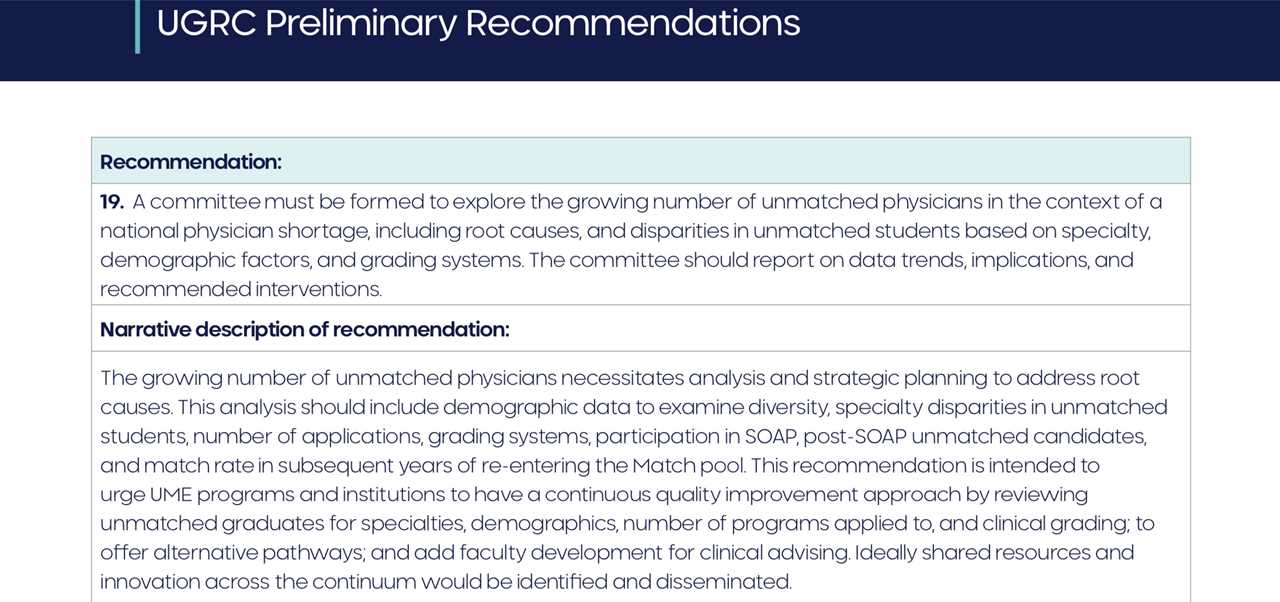

The preliminary report from the UGRC included 42 recommendations; the final report, just 34. Among the specific recommendations left on the cutting room floor was the old #19, which called for studying the unmatched.

Sure, the final report still notes the need to “explore the growing number of unmatched physicians in the context of a national physician shortage” in recommendation #2, but you can’t escape the feeling that unmatched doctors are de-emphasized in the final report. (Other preliminary recommendations that seem de-emphasized include the previously enthusiastic support for an early result acceptance program, a shift that follows a letter from the NRMP noting that they would not pursue such a program this year.)

–

WINNER: STUDENT LOAN LENDERS.

For many students, transitioning to residency is a period of serious financial stress. The student loans that were disbursed in August are long gone… but the first paycheck of residency may not come till mid-July or even the first of August.

To address this important issue, the UGRC brings us Recommendation #33, which calls for funding these predictable expenses “through equitable low interest student loans.”

Which, I guess, is one way to do it.

Of course, another way would be for programs to subsidize this transition. You know, insofar as residents are revenue generators for the hospital well beyond what they are compensated. (n.b., if anyone tries to use selective accounting to argue otherwise, simply ask them why some keen-eyed hospital administrator hasn’t cut the residency program if it’s such a money loser.)

Nope, says the UGRC. “These costs should not be incurred by GME programs,” going as far as to make a specific enjoinder against even offering sign-on bonuses.

Why go there? If a program chose to help with moving expenses or give a small signing bonus – possibly because they realized that doing so was a more effective way of recruiting under-represented minority applicants than giving them a lanyard or can koozie with the hospital logo – why stop them?

(The only reason I can think of is that if some programs started doing this, others might feel compelled to do it, too. Better for everyone to link arms, hold the line, and make less affluent residents take on more loans.)

–

WINNER: INTERVIEW CAPS.

Recommendation #24 calls for limiting the number of interviews that an individual applicant can accept.

When 12% of residency applicants consume half of all interview spots in specialties like internal medicine and general surgery, this is low-hanging fruit.

(My only criticism is that the proposal for specialty-specific limitations on interviews is unnecessarily complicated in a world where many applicants apply to multiple specialties – and may also have the unintended consequence of encouraging some well-qualified-but-nervous applicants to double apply.)

–

WINNER: TRANSPARENCY.

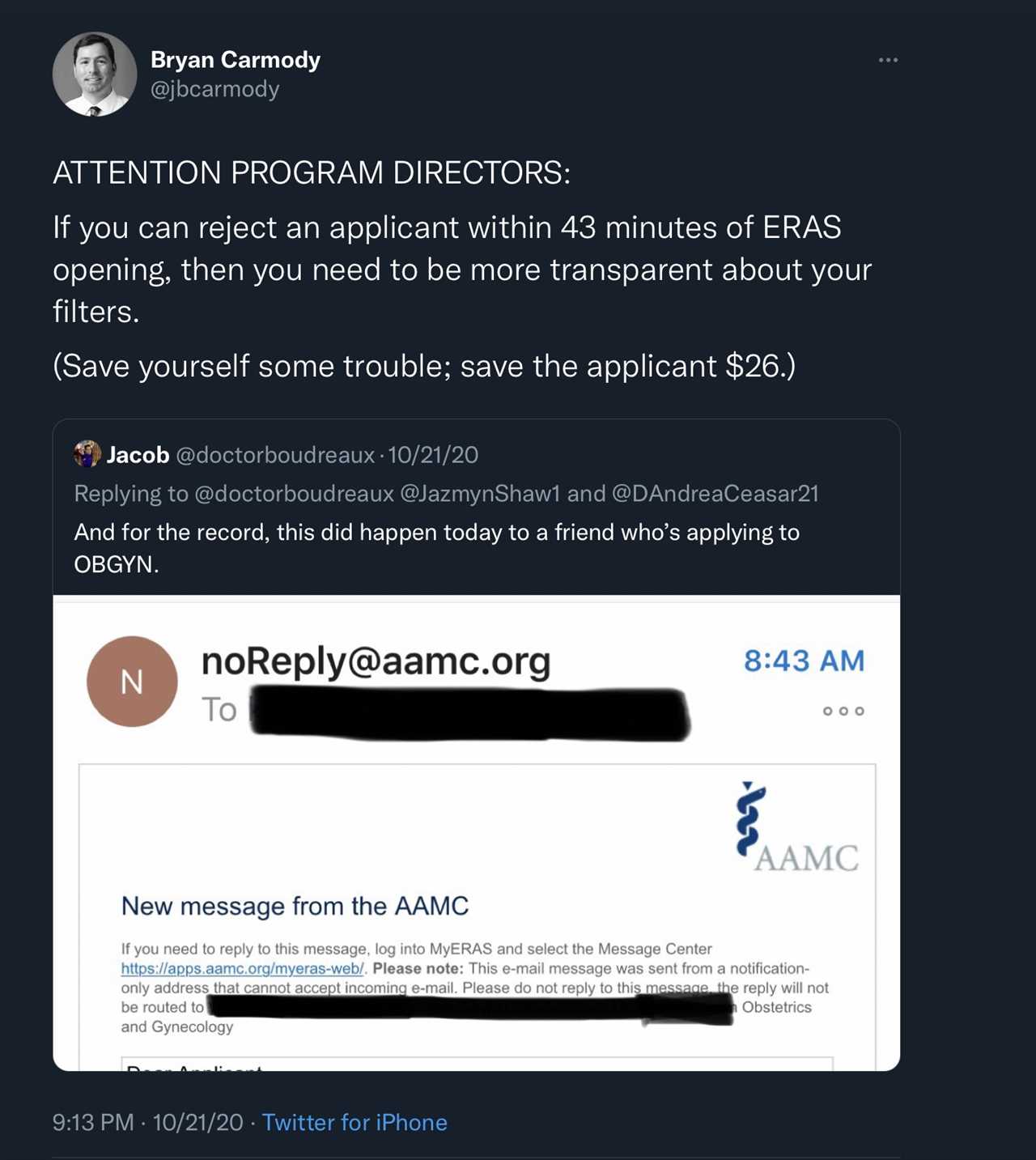

Recommendation #6 calls for a verified database of programs that will include “aggregate characteristics of individuals who previously applied to, interviewed at, were ranked by, and matched for each GME program.”

If this is implemented – and I have doubts it can be practically accomplished while maintaining the confidentiality of residents in the program – it would be a big victory for applicants, who may spend thousands of dollars applying to programs that never even read their application.

–

WINNER: DOXIMITY RESIDENCY RANKINGS.

Transparency about what kinds of applicants match at each program doesn’t just help applicants – it will be a data gold mine for Doximity Residency Navigator or any other company that purports to produce an ordinal list of residency programs by their quality.

Whatever data get included in the UGRC’s “interactive database” will be scraped by bots and used to judge the quality of the program (as if all residency programs were engaged in a direct competition amongst each other for a single goal). Look for more foolish metric-chasing to follow as programs try to make their reported data look good lest the rankings find them unworthy.

–

WINNER: CHEAP INTERN LABOR.

In my original post, I pointed out the somewhat head-scratching preliminary recommendation calling upon the Centers for Medicare and Medicaid Services (CMS) to change the way that they fund residency positions (so that a resident could change specialties and repeat his or her internship without exhausting the CMS subsidy that the program receives). Gotta say, I was disappointed to see this recommendation persist in the final report (now at #3).

I get it: some residents realize only during their internship that they’ve made a horrible mistake with their career choice and need a mulligan.

But to the extent that this problem occurs, does it reflect a failure of GME funding – or a failure of the medical school to provide adequate exposure to that specialty before someone spends a year of 80-hour workweeks doing something that they don’t want to do?

What’s most perplexing is the assertion that this move will “lead to improved resident well-being and positive effects on the physician workforce.” Buddy, lemme just stop you right there – no one’s well-being is going to be improved by doing an unnecessary internship. Seems more like a way to turn career uncertainty into extra cheap intern labor.

Instead of “Try this internship! If you don’t like it, you can choose another one next year!” we should push medical schools to prevent this problem by abbreviating the preclinical curriculum, pushing core clerkships earlier, and giving students more opportunity to explore careers before application season rolls around.

–

LOSER: CHECKED-OUT FOURTH YEARS.

We’ve all kinda come to an agreement that the fourth year of medical school doesn’t pack the same kind of educational calories as the first three years. For better or worse, fourth year has become a mixture of application season and rest/recovery before residency. Though there are exceptions, most students don’t receive educational value commensurate with the tuition they pay.

But Recommendation #30 calls for “meaningful assessment” after the Medical School Performance Evaluation (MSPE) is submitted in September, so that an individualized learning plan can be submitted to the student’s residency program prior to the start of residency.

That’s fine, as far as it goes – but it was the description of what that assessment might be that really made the hair on the back of my neck stand on end:

This assessment might occur in the authentic workplace and based on direct observation or might be accomplished as an Objective Structured Clinical Skills Exam using simulation.

If this were a WWE pay-per-view event, the announcers would be shouting, “Wait!!! It can’t be!! That’s the NBME’s music!!!” as the new, rejuvenated Step 2 CS exam strides into the arena through a cloud of fog. Maybe I’m being paranoid… but I just can’t believe Step 2 CS is gone for good, despite carefully-worded assertions to the contrary.

–

WINNER: APPLICATION CAPS.

I mocked the preliminary recommendations for repeatedly and correctly noting that Application Fever is the root of much of what ails the UME-GME transition – but failing to acknowledge the most logical solution. But in response to feedback received during the period of public comment, I am pleased to report that the final recommendations now include the words “application caps.”

I’m telling you, momentum is building for application caps. Go right ahead and hop on the bandwagon. Plenty of room on board.

–

WINNER: INNOVATION.

Recommendation #23 calls for more innovation, which is hardly controversial or noteworthy. But what’s interesting is that the committee actually recommends some parameters for success.

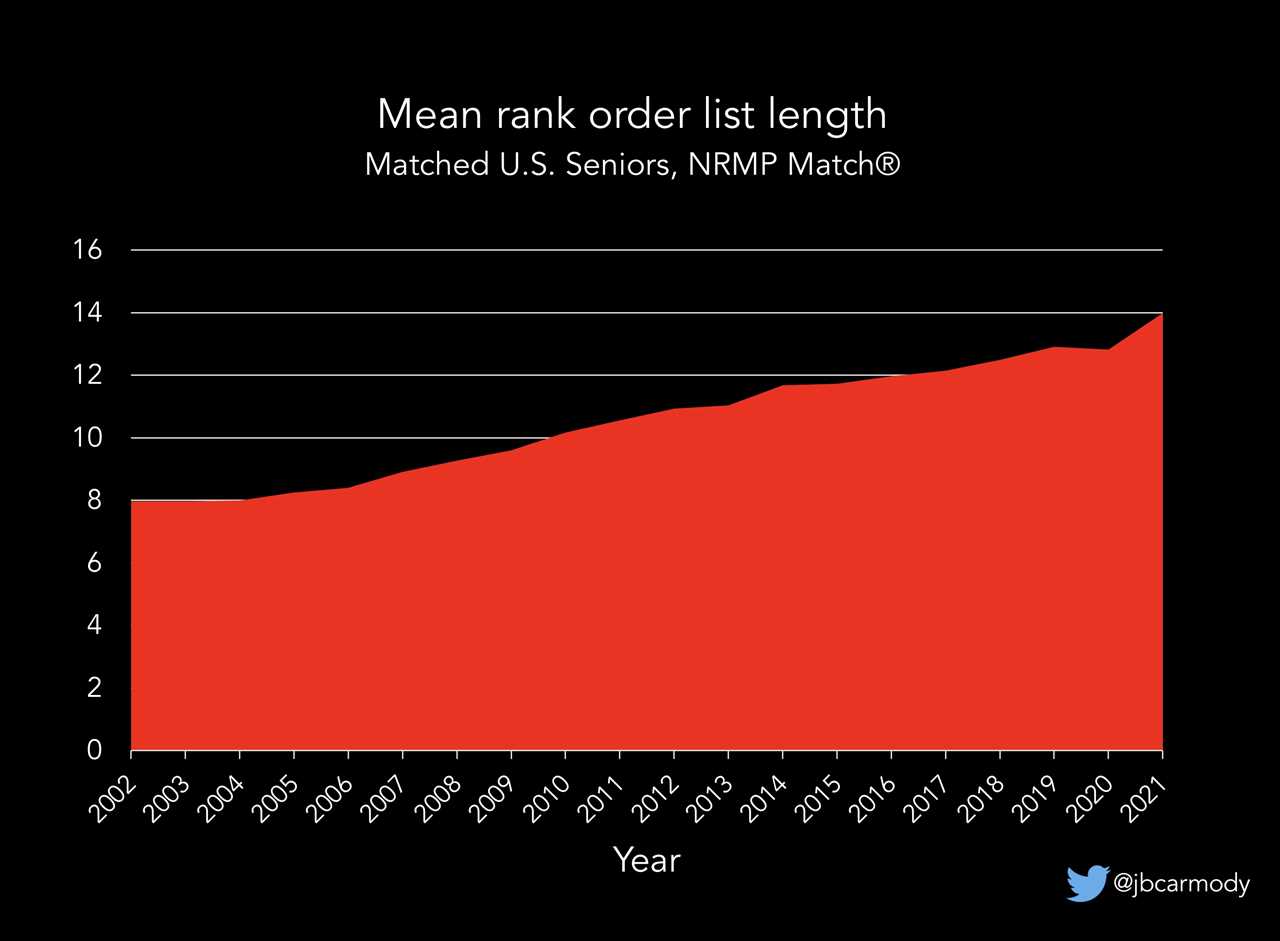

Appendix C notes that goals could include a 20% reduction in applications submitted per position (i.e., from 132 to 102, or back to 2010 levels), or that we should stabilize the fraction of applicants who match outside their top three ranks (a fraction that has been steadily rising each year). And innovation should be allowed so long as the Match has matching rates of +/- 2% from 2020 Match rates (when they were 94% for US seniors, 91% for DOs, and 61% for IMGs).
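For anyone who wants to check those targets against future Match data, here is a minimal sketch of the arithmetic. The function names are hypothetical, and it assumes that "+/- 2%" means two percentage points on either side of the 2020 rate, which the wording above does not make explicit. The only inputs are the figures quoted in this post.

```python
from typing import Tuple

# Figures quoted in the post above; everything else is illustrative.
APPS_PER_POSITION_NOW = 132
MATCH_RATES_2020 = {"US seniors": 94.0, "DOs": 91.0, "IMGs": 61.0}


def reduced_applications(current: float, reduction: float = 0.20) -> float:
    """Applications per position after a fractional reduction."""
    return current * (1 - reduction)


def match_rate_band(rate_2020: float, tolerance_points: float = 2.0) -> Tuple[float, float]:
    """Acceptable range, ASSUMING the tolerance is in percentage points."""
    return (rate_2020 - tolerance_points, rate_2020 + tolerance_points)


def within_band(new_rate: float, rate_2020: float, tolerance_points: float = 2.0) -> bool:
    """True if a new match rate stays inside the band around the 2020 rate."""
    low, high = match_rate_band(rate_2020, tolerance_points)
    return low <= new_rate <= high


if __name__ == "__main__":
    print(round(reduced_applications(APPS_PER_POSITION_NOW), 1))
    for group, rate in MATCH_RATES_2020.items():
        print(group, match_rate_band(rate))
```

Under these assumptions, a strict 20% cut from 132 works out to roughly 106 applications per position, so the quoted figure of 102 corresponds to a slightly larger reduction of about 23%.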

And maybe this is what I find most encouraging about the otherwise-tepid UGRC recommendations. It does feel like there is some momentum building for real, practical change. More and more people are recognizing the problems and starting to think, at least, about ways of doing things other than how they’ve always been done, and we’re starting to set some parameters for how those changes might occur. These recommendations won’t get us where we want to go – but they build upon and keep those conversations going, and have to be considered a step in the right direction.

(Hey, I’m a glass-half-full kind of guy.)

Dr. Carmody is a pediatric nephrologist and medical educator at Eastern Virginia Medical School. This article originally appeared on The Sheriff of Sodium.

------------------------------------

By: Christina Liu

Title: Recommendations From the Coalition for Physician Accountability’s UME-to-GME Review Committee: Winners & Losers Edition

Sourced From: thehealthcareblog.com/blog/2021/09/17/recommendations-from-the-coalition-for-physician-accountabilitys-ume-to-gme-review-committee-winners-losers-edition/

Published Date: Fri, 17 Sep 2021 15:19:29 +0000